Abstract your cluster provisioning away with Crossplane and ClusterAPI (TKG on vSphere)

I've recently been taking my first steps with Crossplane, and I want to share some of my findings and opinions, along with a handy way to abstract cluster provisioning away: a composition called "Project", which is essentially a cluster with some managed resources running on it.

Crossplane is known by many as a solution for managing resources external to your Kubernetes cluster as Kubernetes resources. I have heard it described as a "Terraform (or should I say, OpenTofu) which runs in Kubernetes". The comparison is indeed valid: instead of a Terraform state, you have resources in your Kubernetes cluster's etcd store, and instead of HCL, you use YAML. Crossplane records each external object as a custom resource (an instance of a CRD) and uses providers to create those resources on the desired backends. Yes, you read that right, every kind of resource gets its own CRD.

But not everything needs to be a CRD! - Not me

The fact that they are custom resources in your cluster allows you to create Compositions (roughly the same concept as OpenTofu modules), so you can start abstracting your platform away! You can now leverage the power of the Kubernetes API without having to build custom operators.

While Crossplane is mostly known for creating and managing resources which are external to Kubernetes clusters (commonly from cloud providers), something which I think gets overlooked is the possibility of easily building abstractions over resources running anywhere, and yes, that also includes Kubernetes resources. Like many others, I am already building abstractions over Kubernetes and non-Kubernetes resources with Helm charts and Terraform modules, but to me this seems like a step further towards integrating and coupling platform components. Additionally, building these abstractions, and constraining developers to only create objects of that kind, minimizes their exposure to Kubernetes' internals, and therefore their cognitive load when migrating or deploying to Kubernetes.

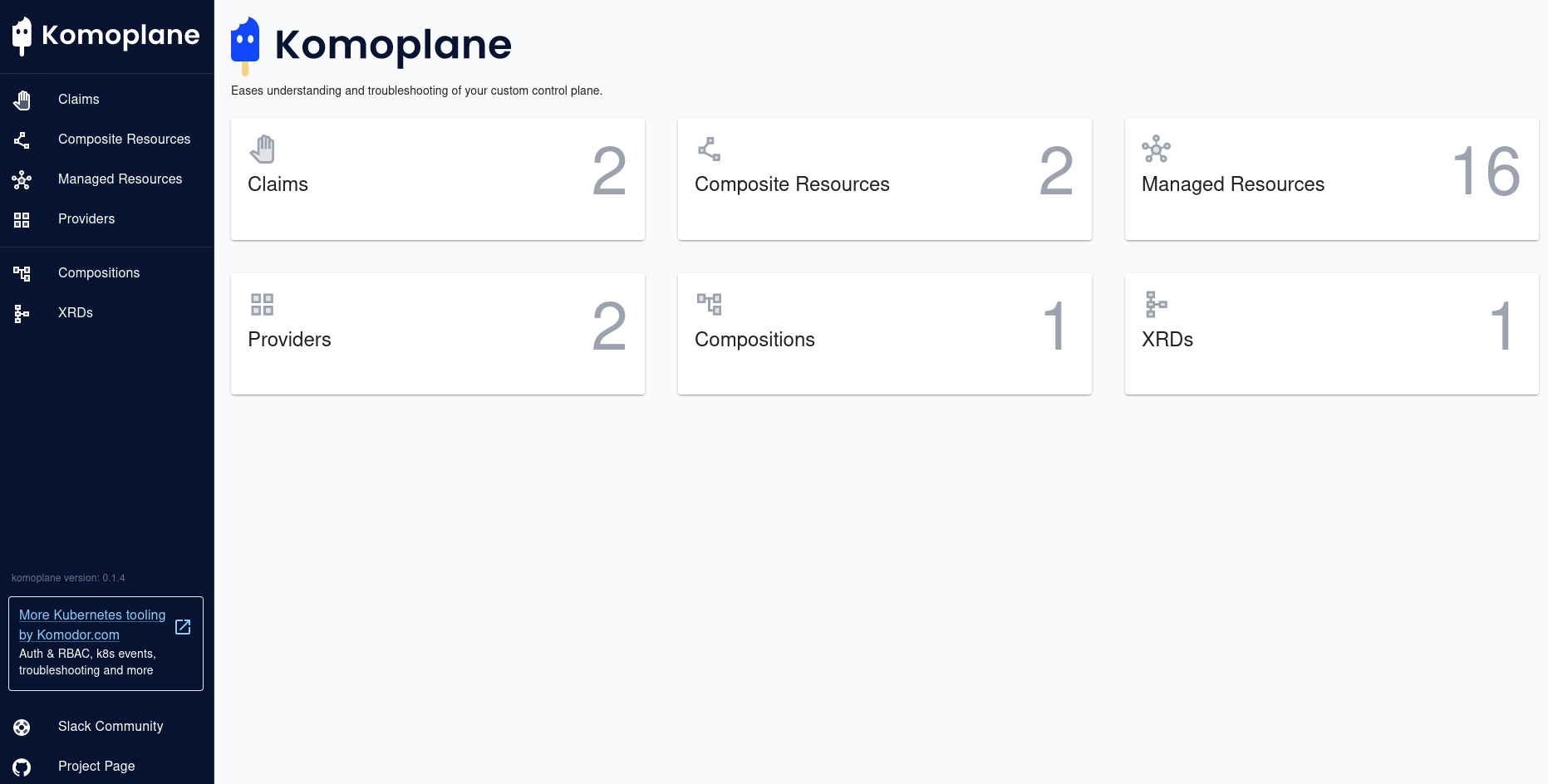

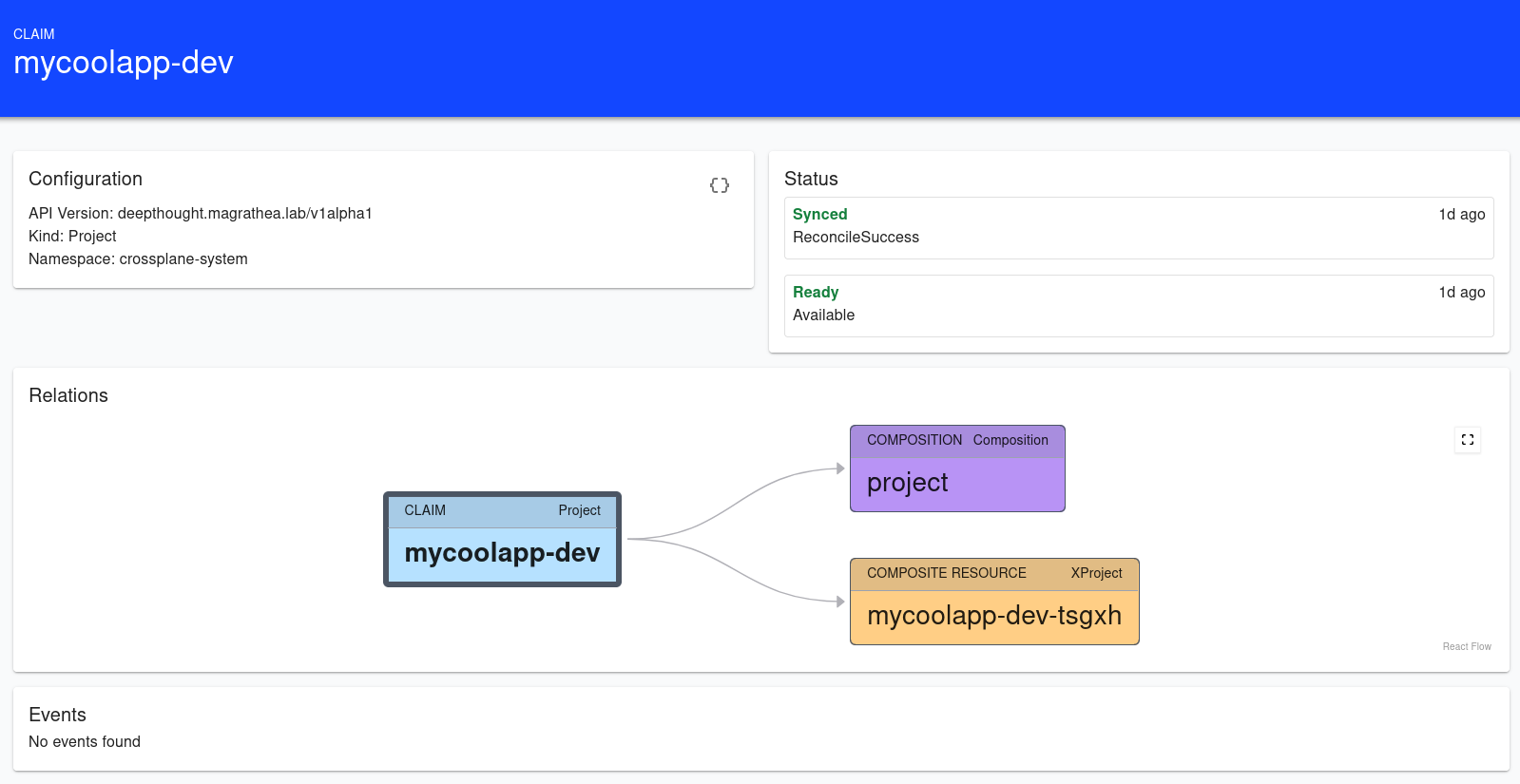

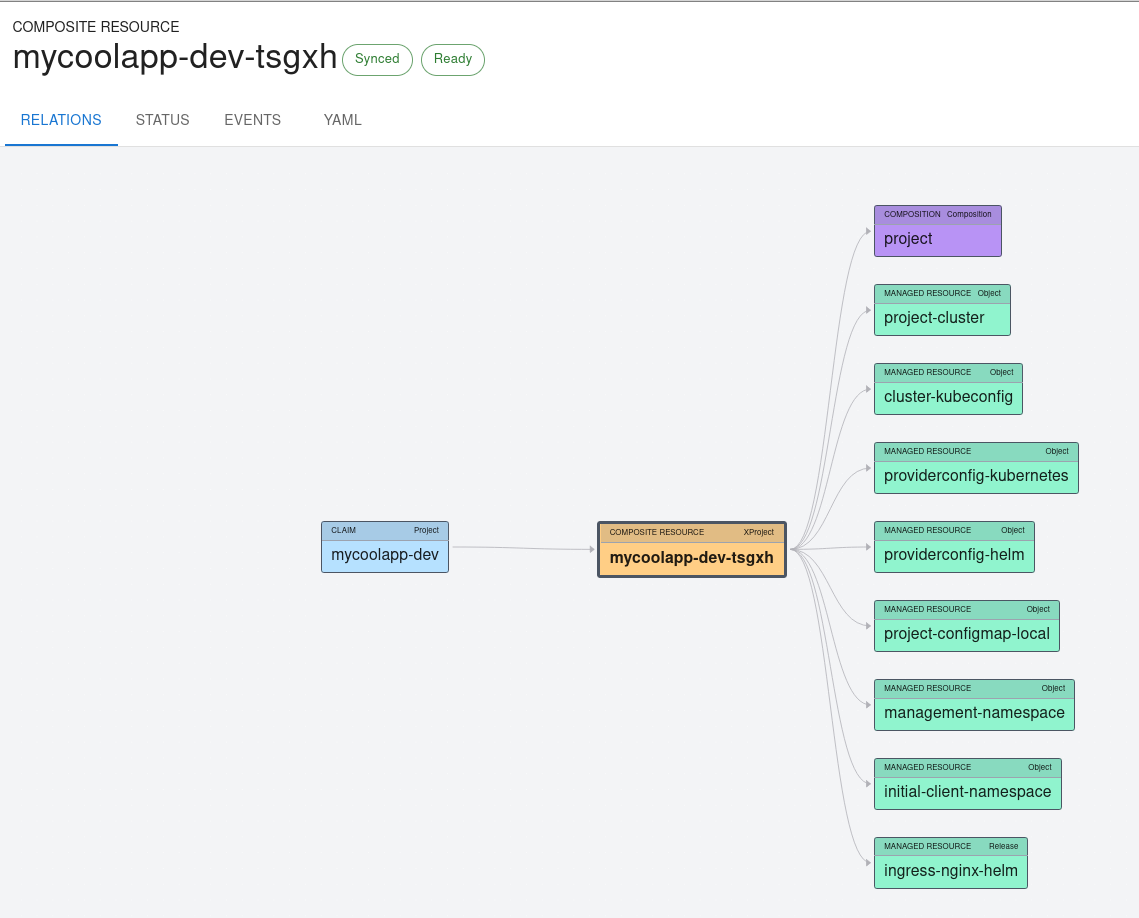

Komoplane - UI for Crossplane

For this we have to thank the folks at Komodor, who created a straightforward UI for visualizing managed resources, compositions, claims and more. It also includes events and statuses, which come in handy for troubleshooting. I will add some screenshots from Komoplane to give a better overview of the resources.

Case Study: Managing clusters and their resources with Crossplane

Usually, if you were provisioning clusters on a public cloud like GCP, you would just install the Crossplane provider "provider-gcp", plus a ProviderConfig including your Google Cloud credentials. In my case, I will be provisioning clusters in my homelab with Tanzu Kubernetes Grid with a Supervisor cluster. Although there is no official or community-built "provider-tkg", one is not really needed, because TKG clusters are created as ClusterAPI "Cluster" resources deployed in the Supervisor cluster. Check VMware's docs for an example of a cluster definition for TKG 2.2.

My goal is to provision clusters, as well as namespaces, RBAC and an example Helm chart (ingress-nginx) for the potential consumer of the cluster.

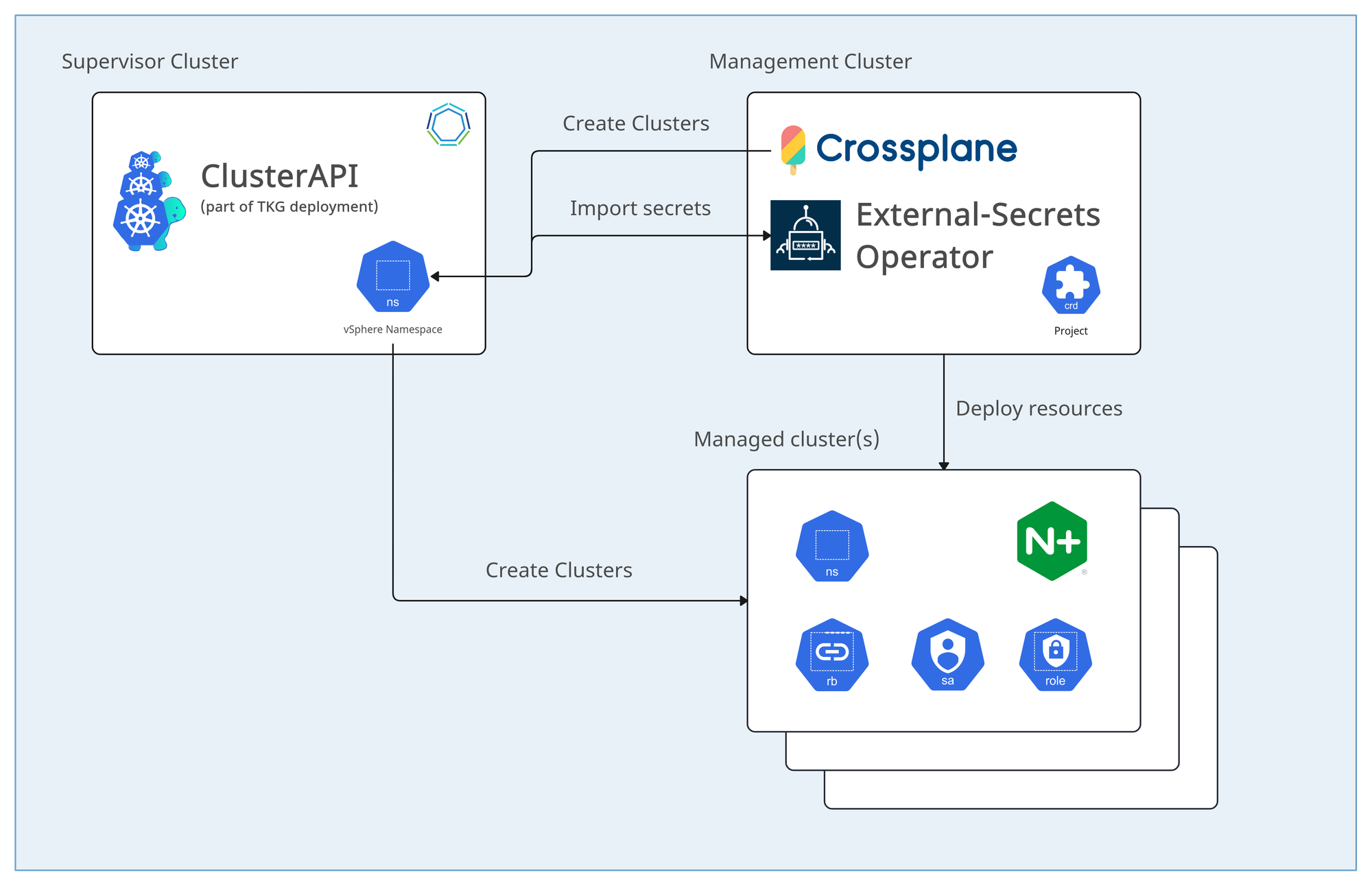

But I am not going to run my main control plane on the Supervisor cluster. First, because the point of the Supervisor cluster is to provide and manage the underlying vSphere resources, and second, because I don't need to: Crossplane Compositions allow me to combine resources running in different backends into a single Kubernetes resource. So, my first composition is "Project", which will deploy:

ON SUPERVISOR CLUSTER:

- Cluster (ClusterAPI clusterclass: tanzukubernetescluster)

ON MANAGEMENT CLUSTER:

- ExternalSecret (in order to import the managed cluster's kubeconfig from the Supervisor cluster)

- ConfigMap (with some data about the created cluster)

- ProviderConfig (to enable deployment of k8s resources in newly created cluster)

- ProviderConfig (to enable deployment of helm charts in newly created cluster)

ON MANAGED CLUSTER(S):

- Development Namespace

- Management Namespace (for managed applications)

- RBAC (constraining user to only deploy into the development Namespace)

- Managed applications:

  - NGINX ingress controller (helm chart)

An image is worth a thousand words so check out the diagram below to get an overview:

1 - Configure Crossplane

Everything contained in this post is available in my GitHub repo crossplane-tkg.

git clone https://github.com/mestredelpino/crossplane-tkg.git

cd crossplane-tkg/

NOTE: Make sure to have Crossplane running on your management cluster.

Deploy and configure providers

Although all built-in Kubernetes API resources are already defined in the cluster, Crossplane can only build compositions out of resources backed by Custom Resource Definitions. provider-kubernetes bridges that gap by wrapping arbitrary Kubernetes manifests in its own custom resource. Since our Crossplane instance (in the management cluster) needs to be able to create ClusterAPI resources in the Supervisor cluster, we will first deploy provider-kubernetes. We will also deploy provider-helm to manage Helm chart installations.

We will also create a DeploymentRuntimeConfig for provider-kubernetes, to set the --debug flag, and to give the provider's deployment a known, static name:

kubectl -n crossplane-system apply -f management/providers
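The manifests in management/providers are not reproduced in this post. As a rough sketch (not the exact contents of the repo), a provider plus its DeploymentRuntimeConfig could look like the following. The object names and the `<VERSION>` placeholder are assumptions for illustration; provider-helm would be installed with an analogous Provider object.

```yaml
# Sketch only: names and <VERSION> are placeholders, not taken from the repo.
apiVersion: pkg.crossplane.io/v1beta1
kind: DeploymentRuntimeConfig
metadata:
  name: provider-kubernetes
spec:
  deploymentTemplate:
    spec:
      selector: {}
      template:
        spec:
          containers:
            # "package-runtime" is the container name Crossplane uses for the provider
            - name: package-runtime
              args:
                - --debug
  # A fixed service account name makes it easy to reference in RBAC bindings
  serviceAccountTemplate:
    metadata:
      name: provider-kubernetes
---
apiVersion: pkg.crossplane.io/v1
kind: Provider
metadata:
  name: provider-kubernetes
spec:
  package: xpkg.upbound.io/crossplane-contrib/provider-kubernetes:<VERSION>
  runtimeConfigRef:
    name: provider-kubernetes
```

Pinning the service account name matters because, without it, the provider's service account gets a generated name that changes with each package revision.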

The provider will also need the necessary permissions to manage ExternalSecrets and ProviderConfigs for both the Helm and Kubernetes providers. We will just create some cluster roles and bind them to the service account we specified in the DeploymentRuntimeConfig:

kubectl -n crossplane-system apply -f providers/rbac.yaml
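To give an idea of what such RBAC might contain, here is a minimal sketch. It assumes the provider's service account is called `provider-kubernetes` and lives in `crossplane-system`; the role name is made up, and the real rbac.yaml in the repo may grant a different set of permissions.

```yaml
# Sketch only: role name and service account name are assumptions.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: provider-kubernetes-project-resources
rules:
  # ExternalSecrets that import the managed clusters' kubeconfigs
  - apiGroups: ["external-secrets.io"]
    resources: ["externalsecrets"]
    verbs: ["*"]
  # ProviderConfigs for provider-kubernetes and provider-helm
  - apiGroups: ["kubernetes.crossplane.io", "helm.crossplane.io"]
    resources: ["providerconfigs"]
    verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: provider-kubernetes-project-resources
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: provider-kubernetes-project-resources
subjects:
  - kind: ServiceAccount
    name: provider-kubernetes
    namespace: crossplane-system
```

For anything beyond a homelab, you would probably want to narrow the verbs down instead of using a wildcard.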

Create deployment targets (Crossplane ProviderConfig)

To target a specific cluster, we need a ProviderConfig that our Compositions can reference. In the management cluster, we will create two of them: one to deploy Kubernetes resources into the management cluster itself, and another one targeting the Supervisor cluster:

apiVersion: kubernetes.crossplane.io/v1alpha1
kind: ProviderConfig
metadata:
  name: provider-kubernetes-local
  namespace: crossplane-system
spec:
  credentials:
    source: InjectedIdentity
---
apiVersion: kubernetes.crossplane.io/v1alpha1
kind: ProviderConfig
metadata:
  name: provider-kubernetes-supervisor
  namespace: crossplane-system
spec:
  credentials:
    source: Secret
    secretRef:
      namespace: crossplane-system
      name: supervisor-kubeconfig
      key: kubeconfig

The second ProviderConfig references a secret which contains the Supervisor's kubeconfig, so that Crossplane can create clusters by targeting the Supervisor cluster. Create that secret from your Supervisor kubeconfig file:

kubectl -n crossplane-system create secret generic supervisor-kubeconfig --from-file=kubeconfig=<YOUR_SUPERVISOR_KUBECONFIG_FILE>

TANZU WITH SUPERVISOR SPECIFIC:

While your kubeconfig (which you got with the Tanzu CLI) will be enough for Crossplane to create the clusters, Crossplane will not be able to observe them afterwards, so they will show as Ready, but not Synced. You will get the following warning message:

Warning: CannotObserveExternalResource managed/object.kubernetes.crossplane.io cannot get object: failed to get API group resources: unable to retrieve the complete list of server APIs: cluster.x-k8s.io/v1beta1: Unauthorized

To fix this, you should create a dedicated identity for Crossplane and generate a kubeconfig for it. For experimentation, I just used the "kubernetes-admin" kubeconfig, which you can get by SSHing into any of the Supervisor nodes. William Lam's post on this explains how to get the kubeconfig from there.

Define the abstraction (Crossplane Compositions)

Now let's deploy the definition of our "Project" composition, as well as the XRD (CompositeResourceDefinition) needed to create resources from that composition's blueprint:

Apply Compositions and CompositeResourceDefinitions:

kubectl apply -f crossplane/project-composition.yaml

kubectl apply -f crossplane/project-composite-rd.yaml
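The composition itself is too long to reproduce here, but the core idea is that every Kubernetes resource it creates is wrapped in a provider-kubernetes Object pointing at the right ProviderConfig. The following is a hedged sketch of what the wrapped ClusterAPI Cluster could look like once rendered. The object and cluster names, replica counts and all `<...>` placeholders are assumptions, and the Object API version depends on your provider-kubernetes release.

```yaml
# Sketch only: not the composition from the repo, just the shape of one resource.
apiVersion: kubernetes.crossplane.io/v1alpha2   # v1alpha1 on older provider versions
kind: Object
metadata:
  name: mycoolapp-dev-cluster
spec:
  # Target the Supervisor cluster instead of the management cluster
  providerConfigRef:
    name: provider-kubernetes-supervisor
  forProvider:
    manifest:
      apiVersion: cluster.x-k8s.io/v1beta1
      kind: Cluster
      metadata:
        name: mycoolapp-dev
        namespace: <YOUR_VSPHERE_NAMESPACE>
      spec:
        topology:
          class: tanzukubernetescluster
          version: <TKR_VERSION>
          controlPlane:
            replicas: 1
          workers:
            machineDeployments:
              - class: node-pool
                name: node-pool-1
                replicas: 2
          variables:
            - name: vmClass
              value: <VM_CLASS>
            - name: storageClass
              value: <STORAGE_CLASS>
```

In the actual composition, values like the cluster name or the number of workers would be patched in from the Project's spec rather than hardcoded.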

Since one of the resources in the project composition is an ExternalSecret to import the new cluster's kubeconfig, we will have to install and configure External-Secrets-Operator.

2 - Configure External-Secrets-Operator

When creating a Tanzu Kubernetes Cluster with ClusterAPI, it creates a cluster, but it also creates important secrets such as the cluster's kubeconfig or the nodes' main user password (for ssh), among others. In this case, we are mostly interested in importing the new clusters' kubeconfig into the management cluster, so that Crossplane can deploy resources into the managed clusters.
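As an illustration, an ExternalSecret that pulls a managed cluster's kubeconfig into the management cluster could look roughly like this. ClusterAPI stores the kubeconfig in a secret named `<cluster-name>-kubeconfig` under the key `value`; the SecretStore name `supervisor`, the cluster name and the refresh interval are assumptions for this sketch, and newer External Secrets releases may use a different API version.

```yaml
# Sketch only: store name, cluster name and refresh interval are assumptions.
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: mycoolapp-dev-kubeconfig
  namespace: crossplane-system
spec:
  refreshInterval: 1h
  secretStoreRef:
    kind: SecretStore
    name: supervisor
  target:
    # Secret created in the management cluster
    name: mycoolapp-dev-kubeconfig
  data:
    - secretKey: kubeconfig
      remoteRef:
        # Secret created by ClusterAPI in the vSphere namespace
        key: mycoolapp-dev-kubeconfig
        property: value
```

The resulting secret can then be referenced by a ProviderConfig, in the same way the Supervisor kubeconfig secret is.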

NOTE: Ideally, Tanzu would include some sort of Vault integration at deployment, so that all Supervisor cluster secrets get stored in Vault. You could then use External Secrets to import those secrets from Vault. You could probably set this up yourself, but that is out of scope for this post. Having said that, we can still use External Secrets' Kubernetes provider to import the secrets directly from the Supervisor cluster. Let's set that up then.

ON SUPERVISOR CLUSTER:

- Create ServiceAccount, Role and RoleBinding for External-Secrets (running on management cluster)

kubectl -n <YOUR_VSPHERE_NAMESPACE> apply -f supervisor/external-secrets/rbac.yaml

- Now get the token from the secret associated with the new service account:

eso_token_secret_name=`kubectl get secrets -n <YOUR_VSPHERE_NAMESPACE> -o name | grep external-secrets-mgmt-read-token | sed -e 's/secret\///g'`

eso_token=`kubectl -n <YOUR_VSPHERE_NAMESPACE> get secret $eso_token_secret_name -o=jsonpath='{.data.token}' | base64 -d`

- You will also need your cluster's certificate:

</dev/null openssl s_client -connect <SUPERVISOR_CLUSTER_IP>:6443 -servername <SERVER_NAME> | openssl x509 > supervisor.crt
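For reference, the RBAC applied in the first step above could be sketched as follows. The name `external-secrets-mgmt-read` is inferred from the grep pattern used to find the token secret, so treat it (and the explicit token Secret) as assumptions; the Role rules follow what the External Secrets Kubernetes provider needs in order to read secrets from a namespace.

```yaml
# Sketch only: apply with "kubectl -n <YOUR_VSPHERE_NAMESPACE> apply -f ...".
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-secrets-mgmt-read
---
# Long-lived token for the service account (not auto-created on recent Kubernetes versions)
apiVersion: v1
kind: Secret
metadata:
  name: external-secrets-mgmt-read-token
  annotations:
    kubernetes.io/service-account.name: external-secrets-mgmt-read
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: external-secrets-mgmt-read
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list", "watch"]
  # Used by External Secrets to validate the store
  - apiGroups: ["authorization.k8s.io"]
    resources: ["selfsubjectrulesreviews"]
    verbs: ["create"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: external-secrets-mgmt-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: external-secrets-mgmt-read
subjects:
  - kind: ServiceAccount
    name: external-secrets-mgmt-read
    namespace: <YOUR_VSPHERE_NAMESPACE>
```

Keeping this as a namespaced Role (rather than a ClusterRole) limits External Secrets to the vSphere namespace where the clusters live.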

ON MANAGEMENT CLUSTER:

- Create a ConfigMap containing the Supervisor's root CA certificate, and a secret with the token for External-Secrets:

kubectl -n crossplane-system create cm supervisor-kube-root-ca --from-file=supervisor.crt

kubectl -n crossplane-system create secret generic eso-supervisor-token --from-literal=token=$eso_token

- Now, create the SecretStore:

kubectl apply -f management/external-secrets/supervisor-secretstore.yaml
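A SecretStore that ties the pieces above together might look like the sketch below. The ConfigMap and secret names and keys match the commands in this section; the store name `supervisor` and the API version are assumptions, so adjust them to your External Secrets release.

```yaml
# Sketch only: the store name is an assumption, the referenced objects come from the steps above.
apiVersion: external-secrets.io/v1beta1
kind: SecretStore
metadata:
  name: supervisor
  namespace: crossplane-system
spec:
  provider:
    kubernetes:
      # Namespace on the Supervisor cluster where the cluster secrets live
      remoteNamespace: <YOUR_VSPHERE_NAMESPACE>
      server:
        url: https://<SUPERVISOR_CLUSTER_IP>:6443
        caProvider:
          type: ConfigMap
          name: supervisor-kube-root-ca
          key: supervisor.crt
      auth:
        token:
          bearerToken:
            name: eso-supervisor-token
            key: token
```

Once applied, `kubectl -n crossplane-system get secretstore` should report the store as valid if the token and certificate are correct.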

3 - Deploy projects

With Crossplane, the providers, and External-Secrets configured, we can now create two example projects, "mycoolapp-dev" and "mycoolapp-prod":

kubectl apply -f examples/project.yaml
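To give a feel for what the consumer of the abstraction ends up writing, here is a purely hypothetical Project. The API group, version and every field under `spec` are invented for illustration and will differ from the XRD in the repo.

```yaml
# Hypothetical example: group, version and spec fields are NOT taken from the repo.
apiVersion: platform.example.org/v1alpha1
kind: Project
metadata:
  name: mycoolapp-dev
spec:
  # Illustrative parameters a Project could expose
  kubernetesVersion: <TKR_VERSION>
  workerNodes: 2
```

The point is that the developer only sees a handful of fields, while the cluster, secrets, ProviderConfigs, namespaces and RBAC are all derived from them.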

Now you will have to wait a bit for the clusters to be created, but Crossplane will eventually get the managed clusters' kubeconfigs and deploy NGINX and other resources on them.
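Under the hood, deploying the chart into a managed cluster boils down to a provider-helm ProviderConfig that uses the imported kubeconfig, plus a Release that references it. A minimal sketch, assuming the imported secret is called `mycoolapp-dev-kubeconfig` with the key `kubeconfig`, and that the management namespace is simply called `management` (all of these names and the `<CHART_VERSION>` placeholder are assumptions):

```yaml
# Sketch only: resource names, namespace and chart version are placeholders.
apiVersion: helm.crossplane.io/v1beta1
kind: ProviderConfig
metadata:
  name: mycoolapp-dev-helm
spec:
  credentials:
    source: Secret
    secretRef:
      namespace: crossplane-system
      name: mycoolapp-dev-kubeconfig
      key: kubeconfig
---
apiVersion: helm.crossplane.io/v1beta1
kind: Release
metadata:
  name: mycoolapp-dev-ingress-nginx
spec:
  # Deploy into the managed cluster, not the management cluster
  providerConfigRef:
    name: mycoolapp-dev-helm
  forProvider:
    namespace: management
    chart:
      name: ingress-nginx
      repository: https://kubernetes.github.io/ingress-nginx
      version: <CHART_VERSION>
```

The Release stays unready until the kubeconfig secret exists, which is why the resources on the managed cluster only show up some time after the cluster itself.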

And that's it: we have now provisioned a cluster and created resources on three clusters with a single Kubernetes resource, based on an abstraction we built.

For now this is quite simple: a project maps one to one to a cluster (and some resources on it). But you could also design the abstraction so that a project contains many clusters and other sets of resources, such as shared databases, S3 buckets, message queues, or any other resources in your desired cloud provider. As long as there is a Crossplane provider, you are good to go!

Last thoughts

This was just an example of how you can create and manage resources in different clusters (including the clusters themselves), but there are many ways you could go about it, and Crossplane will allow you :). I have to say that, even with the few resources defined in my composition, it was starting to get hard to navigate because of its size. It depends on your setup, but I think it might still be beneficial to create some Helm charts at the lower level, so you can keep your main config values in one place and use them within the Crossplane composition to configure all other resources accordingly. The question is, when does it get too meta? I don't know, but I am here for the ride.

While I have yet to see a real Crossplane-based platform in action in production, the things included in this post, and others I am learning along the way, make me very excited about this framework.